A collaborative robot for industrial work environments that draws on fitness routines performed on gym machines to make work more physically sustainable.

Machines in industrial workplaces have long been a notorious source of harm to human health. Gym machines, by contrast, help people improve their health and fitness. This observation was the starting point for this project: if people use machines in gyms and similar exercise setups to improve their health, why not take inspiration from that and develop machines for the factory floor that do the same?

This project uses sensor-based technologies to monitor the human worker’s physique, condition, and behavior in real time, and to adapt the physical collaboration between worker and robot in industrial workplace environments to these individual differences. We analyzed human movements and behavior on the factory floor and identified work tasks that resemble fitness routines. Using a human-robot collaborative setting, we then sought to emulate the experience of a fitness routine within a work-related task.

The system takes into account the worker’s height and weight and monitors the worker’s movement metrics and heart rate. Based on these, the robot autonomously adapts its behavior to the human worker, creating a seamless physical interaction that better supports the worker. In addition, the worker can manually set a preference for how much support to receive from the robot. More support means less strain on the worker; less support turns the work task into a calorie-burning fitness routine, while the robot ensures that the worker maintains a healthy posture. Starting from the preference set by the worker, the robot autonomously makes fine adjustments as the collaboration unfolds.

The Gymnast_CoBot approach does not attempt to replace the human worker. Rather, our goal is to use robotic technology to adapt work routines in industrial environments so that they are more sustainable for a human worker’s physical condition. By letting the robot handle physical tasks with potentially harmful long-term effects, such as heavy lifting and straining postures, the worker can focus on operations that remain challenging for robots, like navigating cluttered spaces to place items correctly. Our aim is a form of human-robot collaboration that emphasizes the strengths of both human and robot by redistributing the components of each task between them.

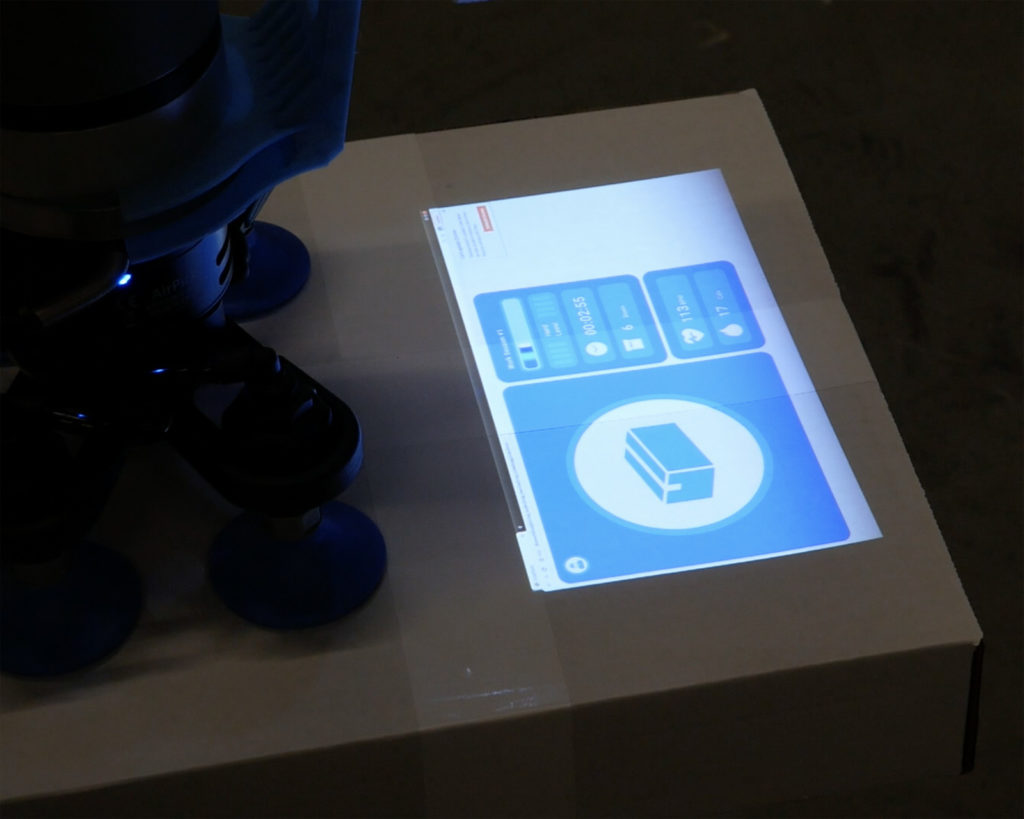

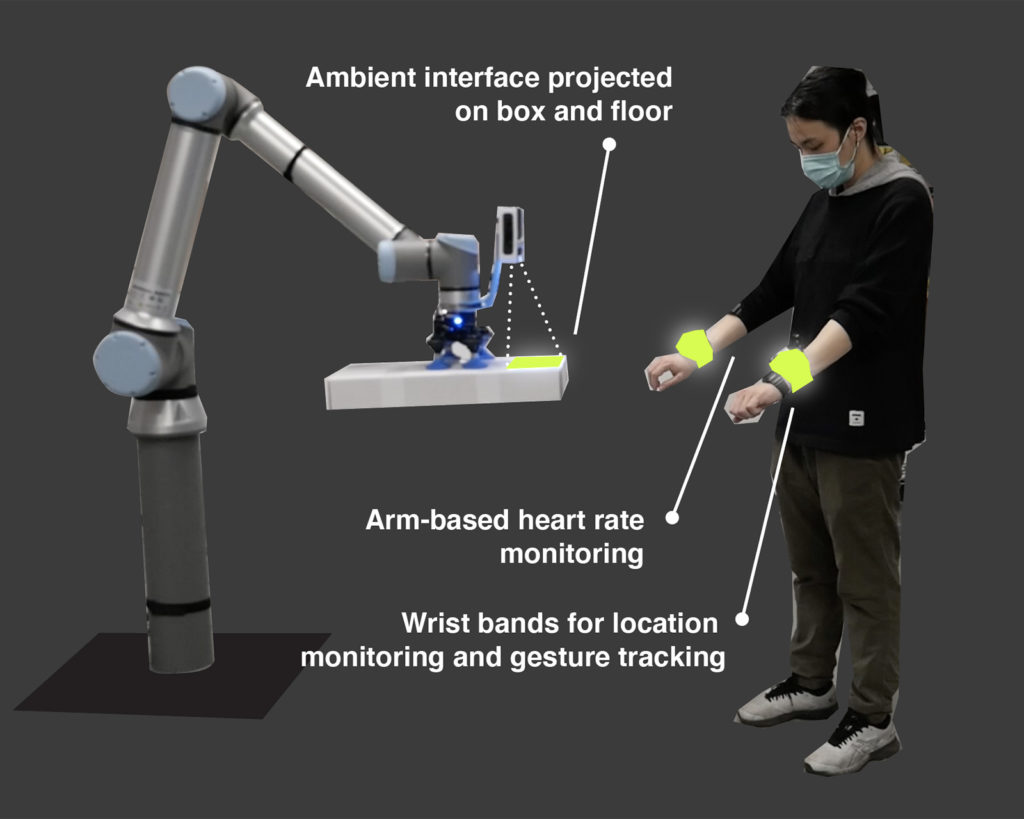

Shared-Workspace User Interface

The robot arm is equipped with a projector that casts the user interface onto meaningful surfaces within the workspace shared by human and robot. In this case, the UI is projected onto the boxes handed from robot to human when a box is present, or otherwise directly onto the floor at the worker’s feet. The interface presents a small set of useful information that reveals what the robot is about to do, how the robot ‘sees’ the human worker, and how and why it adjusts its behavior.

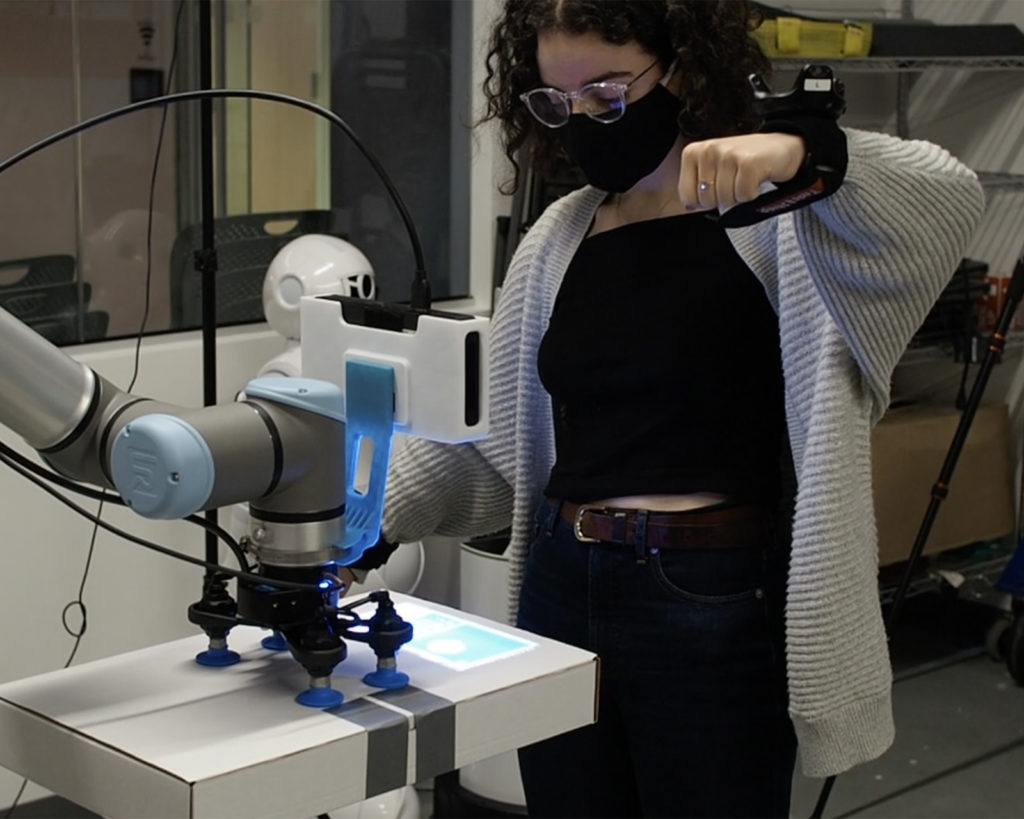

Gesture-Based Interaction

Touchscreens can be impractical in an environment characterized by constant movement, work gloves, and similar constraints. Here, the human interacts with the robot through a small set of arm gestures. Currently, gestures are detected by continuously tracking the location of a wristband relative to two base stations positioned around the shared workspace. Unlike camera-based tracking methods, this approach does not require a line of sight, which makes it robust in a work environment where people move around frequently.
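The page does not describe how gestures are recognized from the tracked wrist positions. A minimal sketch, assuming the tracking system already delivers timestamped 3D wrist positions in a workspace frame, might distinguish two hypothetical gestures: a hand raised and held above shoulder height, and a horizontal swipe. The `WristSample` type, the gesture names, and every threshold below are illustrative assumptions, not the project’s actual gesture set.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class WristSample:
    """One tracked wrist position (hypothetical format)."""
    t: float  # timestamp in seconds
    x: float  # horizontal position in metres, workspace frame
    y: float  # horizontal position in metres, workspace frame
    z: float  # height above the floor in metres


def classify_gesture(
    samples: Sequence[WristSample],
    shoulder_height: float,
    raise_margin: float = 0.25,
    hold_s: float = 0.5,
    swipe_dist: float = 0.4,
    swipe_s: float = 0.6,
) -> Optional[str]:
    """Return "raise", "swipe_left", "swipe_right", or None.

    Samples are assumed to be sorted by timestamp; all thresholds are
    illustrative assumptions.
    """
    if not samples:
        return None
    latest = samples[-1]

    # Raise: the wrist has stayed above shoulder height + margin for at least hold_s.
    threshold = shoulder_height + raise_margin
    run_start = None
    for sample in reversed(samples):
        if sample.z <= threshold:
            break
        run_start = sample
    if run_start is not None and latest.t - run_start.t >= hold_s:
        return "raise"

    # Swipe: net movement along x within the most recent swipe_s seconds.
    window = [s for s in samples if latest.t - s.t <= swipe_s]
    dx = window[-1].x - window[0].x
    if abs(dx) >= swipe_dist:
        return "swipe_right" if dx > 0 else "swipe_left"
    return None
```

Thresholds of this kind would likely need tuning per worker, for example by deriving `shoulder_height` from the worker’s measured body height.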

Sensors

To facilitate the human-robot interaction, the system senses the human worker’s height, weight, and heart rate, as well as the worker’s location, movement, and wrist positions. Body metrics and real-time heart rate are used to determine comfortable hand-over heights and to estimate fatigue. Wrist positions are tracked using signal triangulation between armbands and base stations mounted around the work area. Location tracking and resistance sensing on the robot arm are also used to avoid collisions.
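The page does not specify how hand-over height and fatigue are computed. As a sketch under stated assumptions: hand-over height could be approximated from stature with a fixed anthropometric ratio (standing elbow height is often approximated as about 0.63 of body height), and a crude fatigue proxy could be the smoothed fraction of heart-rate reserve in use, in the style of the Karvonen formula. The ratio, the per-worker resting and maximum heart rates, and the smoothing factor are all assumptions, and `handover_height` and `FatigueEstimator` are hypothetical names.

```python
from dataclasses import dataclass

# Assumed ratio of standing elbow height to body height (a common approximation).
ELBOW_HEIGHT_RATIO = 0.63


def handover_height(stature_m: float, ratio: float = ELBOW_HEIGHT_RATIO) -> float:
    """Hypothetical comfortable hand-over height: roughly standing elbow height."""
    return stature_m * ratio


@dataclass
class FatigueEstimator:
    hr_rest: float       # resting heart rate in bpm (assumed known per worker)
    hr_max: float        # maximum heart rate in bpm (assumed known or estimated)
    alpha: float = 0.1   # smoothing factor of the exponential moving average
    level: float = 0.0   # current fatigue proxy in [0, 1]

    def __post_init__(self) -> None:
        if self.hr_max <= self.hr_rest:
            raise ValueError("hr_max must be greater than hr_rest")

    def update(self, hr_bpm: float) -> float:
        # Fraction of heart-rate reserve currently in use, clamped to [0, 1].
        reserve = (hr_bpm - self.hr_rest) / (self.hr_max - self.hr_rest)
        reserve = min(max(reserve, 0.0), 1.0)
        # Exponential smoothing so a single spike does not swing the estimate.
        self.level += self.alpha * (reserve - self.level)
        return self.level
```

Heart rate reflects overall physical load rather than fatigue directly, so a fuller estimator would probably combine it with the movement metrics the system also tracks.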

Dynamic Behavior

Gymnast_CoBot dynamically adjusts its behavior to that of its human collaborator. The robot uses its estimate of the collaborator’s fatigue to adjust its work rhythm as well as the height at which boxes are handed over. In addition, the system lets the human worker express a preference for how much support the robot should provide. Starting from this stated preference, the robot continues to assess signs of fatigue and micro-adjusts the support level up or down.
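One way to read this preference-plus-micro-adjustment scheme is as a bounded offset around the worker’s chosen support level. The sketch below assumes a fatigue estimate in [0, 1], nudges support up or down by at most `max_offset`, and lengthens the robot’s cycle time as fatigue rises. The function names, the neutral point of 0.5, and the scaling constants are hypothetical, not the project’s actual control law.

```python
def clamp(value: float, low: float = 0.0, high: float = 1.0) -> float:
    """Restrict value to the interval [low, high]."""
    return max(low, min(high, value))


def adjust_support(preference: float, fatigue: float, max_offset: float = 0.2) -> float:
    """Return a support level in [0, 1] close to the worker's preference.

    Fatigue above an assumed neutral point of 0.5 raises support and fatigue
    below it lowers support, never by more than max_offset.
    """
    offset = (clamp(fatigue) - 0.5) * 2.0 * max_offset
    return clamp(preference + offset)


def cycle_time(base_cycle_s: float, fatigue: float, max_slowdown: float = 0.5) -> float:
    """Lengthen the robot's hand-over cycle by up to max_slowdown (50%) at full fatigue."""
    return base_cycle_s * (1.0 + max_slowdown * clamp(fatigue))
```

For example, `adjust_support(0.5, 1.0)` returns roughly 0.7 and `cycle_time(10.0, 1.0)` returns 15.0. Bounding the offset keeps the worker’s stated preference in charge: the robot can fine-tune around it but never overrides it entirely.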

Task-Specific Helper Robots

One key consideration of the Gymnast_CoBot project is that today’s collaborative robots do not need to be designed to replace human workers. Rather, responsive machines in industrial workplace environments can work dynamically alongside humans: robots cover the parts of a task that risk strain, stress, and danger for the human, while humans take care of the parts that are less strenuous but complex enough to challenge a machine, even though they are easy for a person. We envision helper robots of this kind deployed alongside machines such as forklifts or at loading docks, to support and facilitate human work.

Gym/Work Machine Cross-Fertilization

The original inspiration for the Gymnast_CoBot project is a paradox: in factories, humans often strain and harm their bodies when working with machines, while in a very different context, the gym, sophisticated machines have humans lift and carry heavy weights for the benefit of their fitness and overall health. We believe there is much to be learned from bringing these two contexts into contact with each other. As part of this project, we analyzed the movements of workers on the factory floor, compared them to similar movements performed in gym fitness routines, and developed our helper robot to bridge the two: to enable humans to carry out physical work in the factory that is physically sustainable and, ideally, even as beneficial for their health as a workout in a gym.

Team

Kristian Kloeckl (PI), Taskin Padir (PI)

Rui Luo, Patrick Dawson, Mark Zolotas, Zuozheng Zhong, Dipanjan Saha, Salah Bazzi

This project is developed by the Experience Design Lab and the Institute for Experiential Robotics at Northeastern University.

It is part of the multi-year research initiative “CRISP | Co-worker Robots to Impact Seafood Processing: Designs, Tools and Methods for Enhanced Worker Experience.”

This work was supported by the National Science Foundation under award number 1928654.

Development of the prototype takes place in our space at the innovation hub MassRobotics in the Boston Seaport.

A thank you to our industry partners:

– Bristol Seafood, Portland, ME

– Channel Fish Processing, Braintree, MA

– North Coast Seafood, Boston, MA

– Raw Seafoods, Fall River, MA